Translating Visuals into Words: Image Captioning with AI

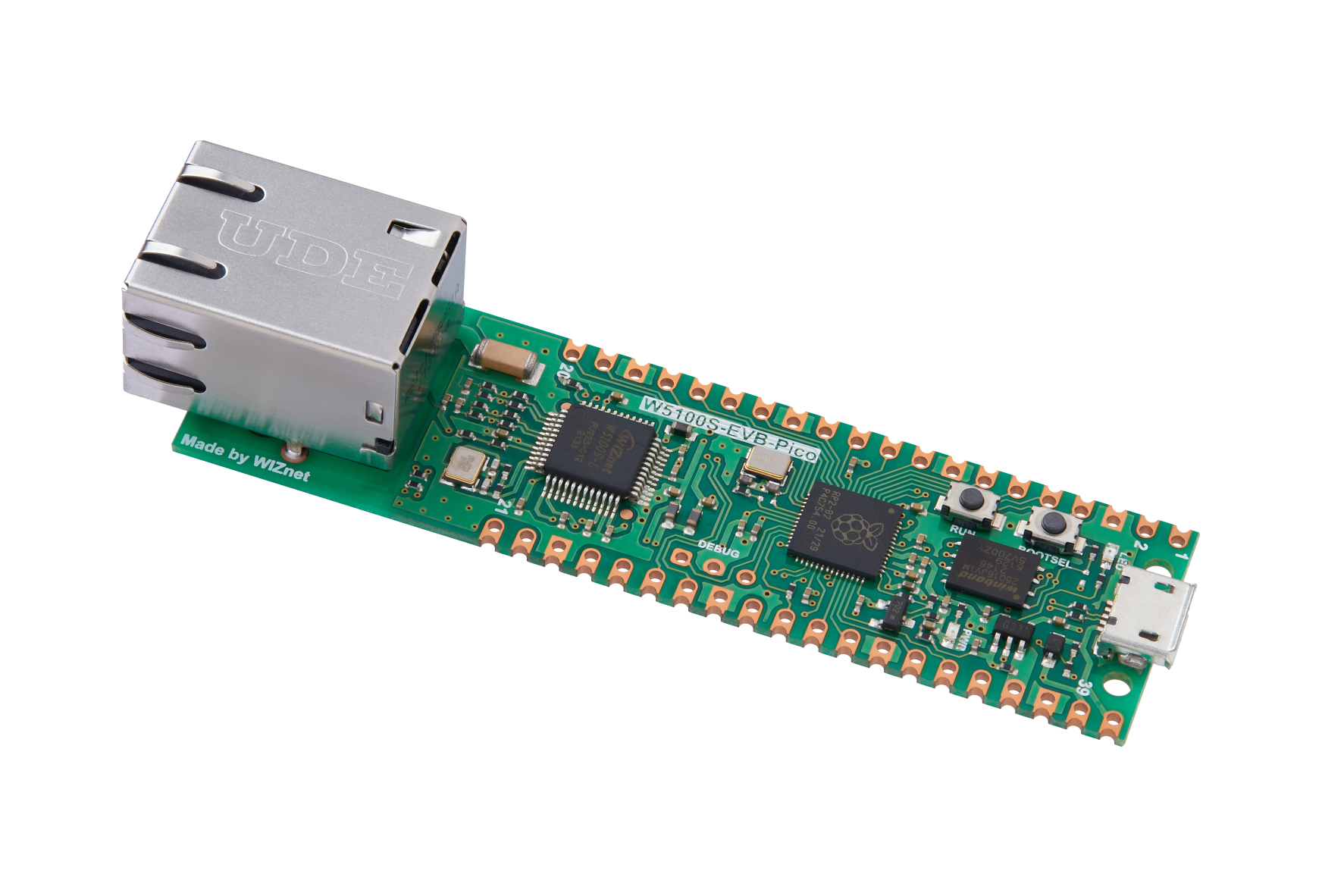

basic project conducted with the W5100S-EVB-PICO board, utilizing a replicated image captioning model to generate descriptions for images

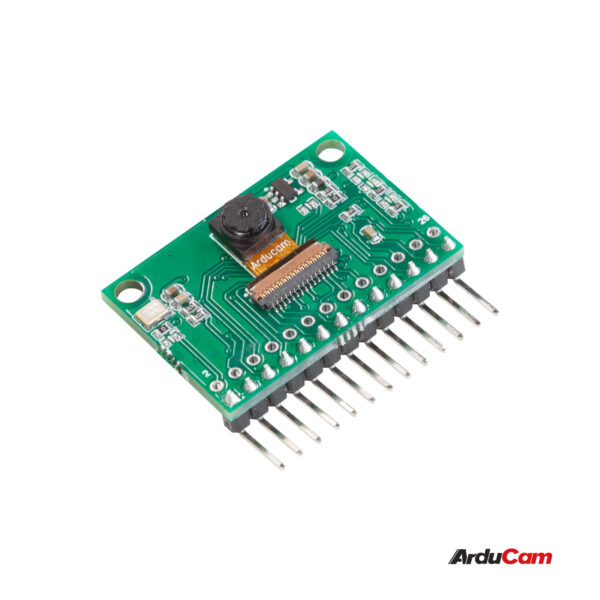

Arducam - HM0360 Camera Module

x 1

Overview

In this project, we are using two W5100S-EVB-PICO boards.

1.The first board connects an Arducam and Ethernet to serve the role of transmitting a picture to a web page upon receiving a web request.

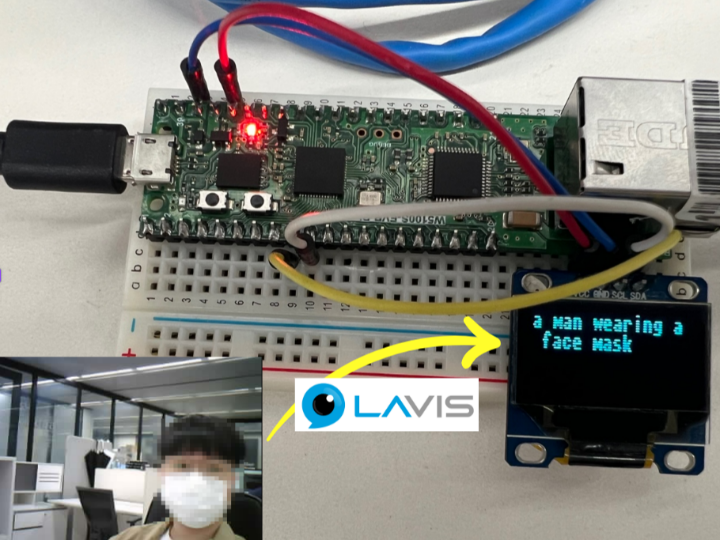

2.The second board will perform image-to-text captioning via the "Replicate API" in the form of a web address serving images from the first PICO board over an Ethernet connection and display them on the ssd1306 OLED screen.

Discuss this in more detail below.

Model used for image captioning

BLIP-2 is a part of Salesforce's LAVIS project. BLIP-2 is a generic and efficient pre-training strategy that leverages the advancements of pretrained vision models and large language models (LLMs). BLIP-2 surpasses Flamingo in zero-shot VQAv2 (scoring 65.0 vs 56.3) and sets a new state-of-the-art in zero-shot captioning (achieving a CIDEr score of 121.6 on NoCaps compared to the previous best of 113.2). When paired with powerful LLMs such as OPT and FlanT5, BLIP-2 unveils new zero-shot instructed vision-to-language generation capabilities for a range of intriguing applications.

Installation and How to Use

in progress

Outputs

Potential Developments

The current focus of this project is on captioning an image and displaying the text. However, by adding speakers in the future, this could evolve into applications for the visually impaired or anomaly detection, among various other project ideas.

Contributions and Feedback

Contributions and feedback on the project are always welcome. Please use the GitHub issue tracker to report issues or create pull requests.

Original link

-

circuitpython - arducam

I modified the code a bit to get a more stable connection.

-

micropython-captioning

Code using the API for IMG2TXT captioning