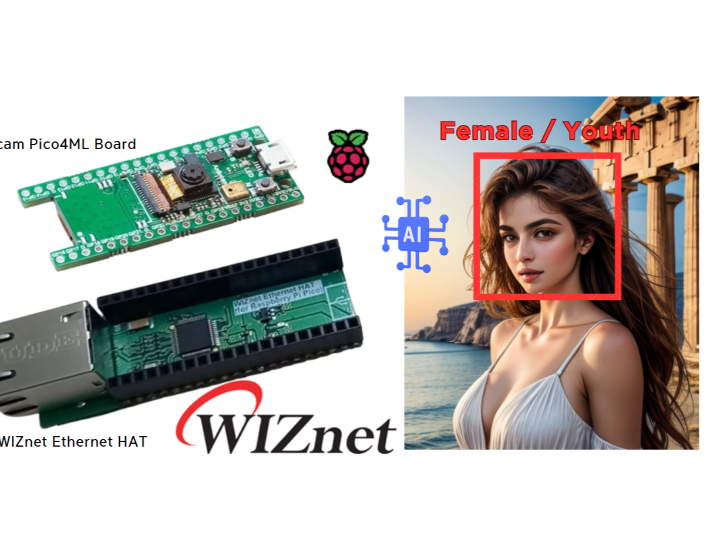

Embedded Age & Gender Detection with Raspberry Pi Pico & WIZnet Ethernet HAT


Software Apps and online services

Dataset: https://susanqq.github.io/UTKFace/

Training notebook: https://github.com/wiznetmaker/Embedded-Age-Gender-Detection-with-Raspberry-Pi-Pico-WIZnet-Ethernet-HAT/blob/main/age-gender-detection-multi-model-prediction.ipynb

Have you ever wondered about the demographics of people visiting your store or passing by your digital signage? This Raspberry Pi Pico-powered solution can help you detect the age group and gender of individuals in real time. In this tutorial, we'll explore how an embedded machine learning (ML) model for age and gender classification can be deployed to an Arm Cortex-M0+ based Raspberry Pi Pico board with a WIZnet Ethernet HAT to analyze images captured by a camera.

The system will run continuously and:

- Capture an image from a camera module connected to the Raspberry Pi Pico board.

- Use the captured image as input to an ML model that detects the age group and gender of the person in the image.

- Smooth the ML model's output using an exponential smoothing algorithm.

- Send the processed data to a server or display it on a connected screen.
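The smoothing step in the list above can be sketched in a few lines. This is a minimal illustration of exponential smoothing applied to a class-probability vector, not the project's firmware code; the function name `smooth_predictions` and the smoothing factor of 0.3 are assumptions chosen for illustration.

```python
def smooth_predictions(previous, current, smoothing_factor=0.3):
    """Exponentially smooth a probability vector.

    smoothed[i] = smoothing_factor * current[i]
                  + (1 - smoothing_factor) * previous[i]
    """
    if previous is None:
        # First frame: there is nothing to blend with yet.
        return list(current)
    return [
        smoothing_factor * c + (1.0 - smoothing_factor) * p
        for p, c in zip(previous, current)
    ]


# Gender probabilities from three consecutive frames (made-up values).
frames = [[0.9, 0.1], [0.2, 0.8], [0.8, 0.2]]

state = None
for probs in frames:
    state = smooth_predictions(state, probs)
```

A smaller smoothing factor makes the output steadier but slower to react, so a single noisy frame (the second one here) does not flip the reported class.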

To preserve privacy, all ML inferencing will be done on the board's Arm Cortex-M0+ processor using the TensorFlow Lite for Microcontrollers (TFLM) library. The application running on the Pico board will only send the age group and gender data over Ethernet using the WIZnet Ethernet HAT.

The Raspberry Pi Pico will be connected to an Arducam 5MP Plus Camera Module, which will capture images at a resolution of 640x480 pixels. The camera module will be connected to the Pico board using SPI for image data and I2C for sensor configuration.

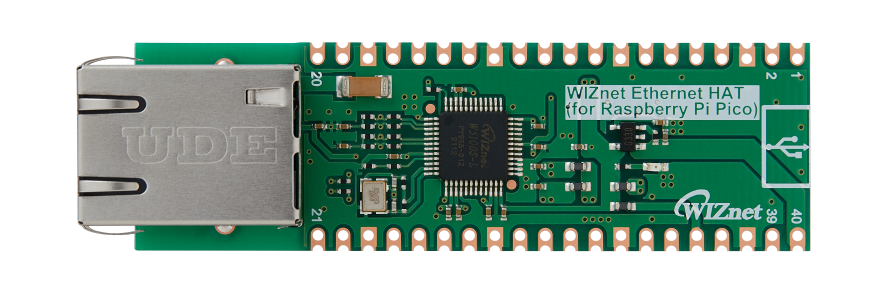

The WIZnet Ethernet HAT will be used to provide Ethernet connectivity to the Raspberry Pi Pico. This will allow the Pico to send the processed age and gender data to a server or display it on a connected screen.

The ML model will be trained on the UTKFace dataset, which contains over 20,000 face images with annotations of age, gender, and ethnicity. The dataset will be preprocessed and split into training, validation, and test sets.
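UTKFace stores its annotations in the file name, in the form `[age]_[gender]_[race]_[date&time].jpg`, with gender encoded as 0 for male and 1 for female. The sketch below shows one way the labels could be extracted and the age mapped to one of seven age groups. The helper names and, in particular, the age boundaries in `AGE_UPPER_BOUNDS` are assumptions made for illustration; the notebook may bin ages differently.

```python
import bisect
import os

# Hypothetical inclusive upper age bounds for the first six groups;
# anything older falls into the last group.
AGE_UPPER_BOUNDS = [2, 5, 12, 19, 39, 59]
AGE_GROUPS = ["infant", "toddler", "child", "adolescent",
              "young adult", "middle-aged", "senior"]


def parse_utkface_filename(path):
    """Return (age, gender) from a name like '25_1_2_20170116174525125.jpg'."""
    name = os.path.basename(path)
    age_str, gender_str = name.split("_")[:2]
    return int(age_str), int(gender_str)


def age_to_group(age):
    """Map an age in years to an age-group index in the range 0..6."""
    return bisect.bisect_left(AGE_UPPER_BOUNDS, age)


age, gender = parse_utkface_filename("UTKFace/25_1_2_20170116174525125.jpg")
group_name = AGE_GROUPS[age_to_group(age)]
```

The resulting integer labels are what a `tf.one_hot(..., depth=7)` or `depth=2` call would then encode.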

The ML model will use the MobileNet V1 architecture with an alpha of 0.25, similar to the person detection example in TensorFlow Lite for Microcontrollers (TFLM). The model will be modified to output the age group and gender of the detected face.
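The effect of the alpha (width multiplier) setting can be made concrete with a little arithmetic. The sketch below lists the per-layer output channel counts of the standard MobileNet V1 design and scales them by truncating `filters * alpha` to an integer, which is how the Keras implementation applies the multiplier. The helper name `scaled_filters` is made up for illustration.

```python
# Output channels of the first convolution and the 13 depthwise-separable
# blocks of MobileNet V1 at alpha = 1.0.
BASE_FILTERS = [32, 64, 128, 128, 256, 256,
                512, 512, 512, 512, 512, 512,
                1024, 1024]


def scaled_filters(alpha):
    """Return the per-layer channel counts for a given width multiplier."""
    return [int(f * alpha) for f in BASE_FILTERS]


quarter_width = scaled_filters(0.25)
feature_size = quarter_width[-1]  # length of the pooled feature vector

# Parameters (weights + biases) of two dense heads on top of that vector.
gender_head_params = feature_size * 2 + 2
age_head_params = feature_size * 7 + 7
```

With alpha set to 0.25 the pooled feature vector shrinks from 1024 to 256 values, which is the main reason this variant is attractive for memory-constrained targets.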

A Jupyter Notebook will be used to train the custom MobileNet V1 model on the UTKFace dataset. The notebook will cover the following steps:

Dataset processing: Download and preprocess the UTKFace dataset, and split it into training, validation, and test sets.

```python
import tensorflow as tf

# Preprocessing function: load an image and one-hot encode its labels.
# `dataset` is expected to yield (image_path, age_label, gender_label) tuples.
def preprocess_data(image_path, age_label, gender_label):
    image = tf.io.read_file(image_path)
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, (224, 224))
    image = tf.keras.applications.mobilenet.preprocess_input(image)  # scales pixels to [-1, 1]
    age_label = tf.one_hot(age_label, depth=7)  # one-hot encode to match the number of age-group classes
    gender_label = tf.one_hot(gender_label, depth=2)  # one-hot encode to match the number of gender classes
    return image, (gender_label, age_label)  # return the gender and age-group labels as a tuple

# Apply the preprocessing
dataset = dataset.map(preprocess_data)

# Split the dataset (80% training, 10% validation, remainder for testing)
train_size = int(0.8 * len(dataset))
val_size = int(0.1 * len(dataset))
test_size = len(dataset) - train_size - val_size

train_dataset = dataset.take(train_size)
remaining_dataset = dataset.skip(train_size)
val_dataset = remaining_dataset.take(val_size)
test_dataset = remaining_dataset.skip(val_size)

# Set the batch size
batch_size = 32
train_dataset = train_dataset.batch(batch_size)
val_dataset = val_dataset.batch(batch_size)
test_dataset = test_dataset.batch(batch_size)

# Data augmentation (these layers are only active during training)
data_augmentation = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal", seed=42),
    tf.keras.layers.RandomRotation(0.2, seed=42),
    tf.keras.layers.RandomZoom(0.2, seed=42),
    tf.keras.layers.RandomContrast(0.3, seed=42),
    # Inputs were already scaled to [-1, 1] by preprocess_input, so the value
    # range is set explicitly instead of relying on the default of (0, 255).
    tf.keras.layers.RandomBrightness(0.3, value_range=(-1.0, 1.0), seed=42),
    tf.keras.layers.GaussianNoise(0.01, seed=42),
])
```

ML model creation: Create a MobileNet V1 model with an input size of (224, 224, 3), alpha of 0.25, dropout of 0.1, and two output heads, one for gender and one for age group.

```python
# Create the MobileNet backbone
ALPHA = 0.25
DROPOUT = 0.10

mobilenet_025_224 = tf.keras.applications.mobilenet.MobileNet(
    input_shape=(224, 224, 3),
    alpha=ALPHA,
    dropout=DROPOUT,
    weights='imagenet',
    pooling='avg',  # 'avg' pooling takes the place of a separate GlobalAveragePooling2D layer
    include_top=False
)

# Build the final model
num_classes = 2  # number of gender classes (male, female)
age_range_classes = 7  # number of age-group classes (infant, toddler, child, adolescent, young adult, middle-aged, senior)

inputs = tf.keras.Input(shape=(224, 224, 3))
x = data_augmentation(inputs)
x = mobilenet_025_224(x)
gender_output = tf.keras.layers.Dense(num_classes, activation='softmax', name='gender')(x)
age_output = tf.keras.layers.Dense(age_range_classes, activation='softmax', name='age')(x)

model = tf.keras.Model(inputs=inputs, outputs=[gender_output, age_output])

# Compile the model
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
    loss={
        'gender': 'categorical_crossentropy',
        'age': 'categorical_crossentropy'
    },
    metrics=['accuracy']
)
```
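Because the model has two softmax heads, each with its own categorical cross-entropy loss, Keras minimizes the sum of the two losses (with equal weights when no `loss_weights` argument is given). The following plain-Python sketch reproduces that arithmetic for a single sample; the probability values are made up for illustration.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# One sample: the true gender is class 1 and the true age group is class 4.
gender_true, gender_pred = [0, 1], [0.2, 0.8]
age_true = [0, 0, 0, 0, 1, 0, 0]
age_pred = [0.05, 0.05, 0.05, 0.10, 0.60, 0.10, 0.05]

gender_loss = categorical_crossentropy(gender_true, gender_pred)
age_loss = categorical_crossentropy(age_true, age_pred)

# Equal weighting of both heads.
total_loss = gender_loss + age_loss
```

Since the seven-way age task usually produces the larger loss, it tends to dominate the gradient; passing `loss_weights` to `model.compile` is one way to rebalance the two heads if that becomes a problem.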

```python
# Configure the callbacks
callbacks = [
    tf.keras.callbacks.EarlyStopping(
        monitor='val_loss',
        patience=3,
        verbose=1,
        restore_best_weights=True,
    ),
    tf.keras.callbacks.ModelCheckpoint(
        filepath='best_model.h5',
        monitor='val_loss',
        save_best_only=True,
        verbose=1,
    ),
]

# Train the model
epochs = 10
history = model.fit(
    train_dataset,
    validation_data=val_dataset,
    epochs=epochs,
    callbacks=callbacks,
)

# Evaluate the model on the held-out test set
loss, gender_loss, age_loss, gender_accuracy, age_accuracy = model.evaluate(test_dataset)
print("Test loss:", loss)
print("Test gender loss:", gender_loss)
print("Test age loss:", age_loss)
print("Test gender accuracy:", gender_accuracy)
print("Test age accuracy:", age_accuracy)

# Save the model
model.save('age_gender_model.h5')
```

Result

Test images

ML model exporting: Convert the trained model to TensorFlow Lite format using quantization for efficient inferencing on the Pico board.

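```python
import tensorflow as tf

# `loaded_model` is not defined in this excerpt; it is assumed here to be
# the model saved in the previous step.
loaded_model = tf.keras.models.load_model('age_gender_model.h5')

# Representative dataset used to calibrate the quantization ranges.
# `val_ds_96_96` is not defined in this excerpt; it is assumed to be a
# validation dataset that yields (image, label) pairs.
def representative_dataset():
    for image, _ in val_ds_96_96.take(100):
        yield [tf.image.resize(image, (224, 224))]  # resize the input to (224, 224)

converter = tf.lite.TFLiteConverter.from_keras_model(loaded_model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_dataset
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
converter.inference_input_type = tf.uint8
converter.inference_output_type = tf.float32

tflite_quant_model = converter.convert()

with open('age_gender_model.tflite', 'wb') as f:
    f.write(tflite_quant_model)
```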
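Quantization maps real values to 8-bit integers through a scale and a zero point, following `real_value = scale * (quantized_value - zero_point)`. The sketch below illustrates that mapping for a `uint8` input. The scale of 1/128 and zero point of 128 are made-up values for illustration; the actual parameters are chosen by the converter and can be read from the converted model, for example via the `quantization` entry returned by `tf.lite.Interpreter.get_input_details()`.

```python
def quantize(real_value, scale, zero_point, qmin=0, qmax=255):
    """Map a real value to an 8-bit integer, clamping to the valid range."""
    q = round(real_value / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the (approximate) real value from its quantized form."""
    return scale * (q - zero_point)


# Hypothetical parameters covering inputs in roughly [-1, 1].
SCALE = 1 / 128
ZERO_POINT = 128

q_mid = quantize(0.5, SCALE, ZERO_POINT)
q_min = quantize(-1.0, SCALE, ZERO_POINT)
q_max = quantize(1.0, SCALE, ZERO_POINT)  # 256 is out of range, so it is clamped
restored = dequantize(q_mid, SCALE, ZERO_POINT)
```

On the device, the camera pixels have to be converted with the model's real scale and zero point before they are copied into the input tensor.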

```python
# Convert the TensorFlow Lite model into a C++ header file.
# `xxd -i <file>` emits its own array declaration, so the bytes are formatted
# in Python to keep the custom `alignas(8)` declaration valid.
with open('age_gender_model.tflite', 'rb') as f:
    model_bytes = f.read()

with open('age_gender_model.h', 'w') as f:
    f.write('alignas(8) const unsigned char tflite_model[] = {\n')
    for i in range(0, len(model_bytes), 12):
        chunk = model_bytes[i:i + 12]
        f.write('  ' + ', '.join(f'0x{b:02x}' for b in chunk) + ',\n')
    f.write('};\n')
    f.write('const int tflite_model_len = {};\n'.format(len(model_bytes)))

print("TensorFlow Lite model converted to C++ header file.")
```

The application on the Pico board will perform the following steps:

```cpp
// Initialize the camera and capture an image
initCamera();
captureImage();

// Perform ML inferencing with the captured image
runInference();

// Smooth the predictions from the ML model
smoothPredictions();

// Send the processed age group and gender data over Ethernet
sendDataOverEthernet();
```
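The last step only needs to transmit the predicted classes, not the image. As a rough host-side illustration of that logic (written in Python rather than firmware C++), the sketch below picks the most likely class from each output head and packs the result into a compact message. The label order follows the class comments in the training code; the JSON message format and the function names are assumptions, not the project's actual protocol.

```python
import json

GENDER_LABELS = ["male", "female"]
AGE_LABELS = ["infant", "toddler", "child", "adolescent",
              "young adult", "middle-aged", "senior"]


def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=values.__getitem__)


def decode_outputs(gender_probs, age_probs):
    """Turn the two probability vectors into human-readable labels."""
    return {
        "gender": GENDER_LABELS[argmax(gender_probs)],
        "age_group": AGE_LABELS[argmax(age_probs)],
    }


def build_payload(result):
    """Serialize the result as compact UTF-8 JSON (hypothetical format)."""
    return json.dumps(result, separators=(",", ":")).encode("utf-8")


payload = build_payload(
    decode_outputs([0.3, 0.7], [0.05, 0.05, 0.1, 0.1, 0.5, 0.1, 0.1])
)
```

Sending only a few dozen bytes per inference keeps the network traffic small and means no image data ever leaves the board.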

This guide demonstrated how a custom age and gender classification model could be trained on the UTKFace dataset and deployed to a Raspberry Pi Pico board with a WIZnet Ethernet HAT. The Pico board captures images from a camera module, performs on-device ML inferencing, and sends the processed age group and gender data over Ethernet. This project can be further extended to analyze customer demographics in retail stores or to provide targeted content on digital signage based on the age and gender of the audience.

For more details about this project, please visit the WIZnetmaker GitHub.