TinyML(1) - Beginning TinyML with W5500-EVB-Pico

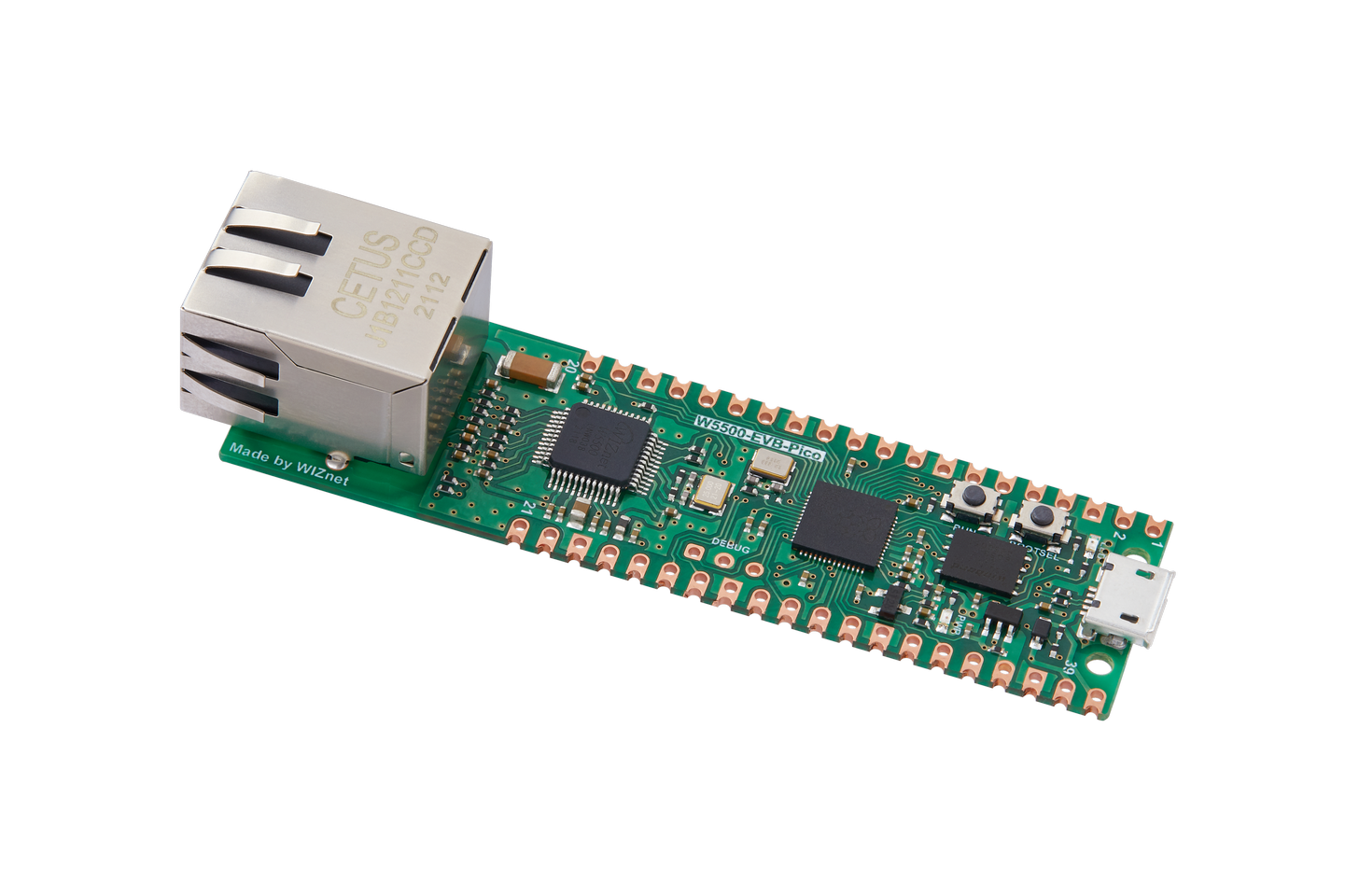

Sine wave prediction and LED control using TensorFlow Lite and W5500-EVB-Pico

This project predicts a sine wave and controls an LED in real time. The main goal is to train a sine wave prediction model with TensorFlow and then convert it to a quantized TensorFlow Lite model, which reduces memory usage and computational cost so that the model can run in real time on actual hardware. A key part of the project is driving the LED from the predicted sine values on the W5500-EVB-Pico board: the board runs the TensorFlow Lite model, processes the data in real time, and makes the LED follow a sine wave. The same approach suits applications that need on-device data processing with real-time response.

Data generation

```python
import math

import matplotlib.pyplot as plt
import numpy as np

# We'll generate this many sample datapoints
SAMPLES = 1000

# Set a "seed" value, so we get the same random numbers each time we run this
# notebook
np.random.seed(1337)

# Generate a uniformly distributed set of random numbers in the range from
# 0 to 2π, which covers a complete sine wave oscillation
x_values = np.random.uniform(low=0, high=2*math.pi, size=SAMPLES)

# Shuffle the values to guarantee they're not in order
np.random.shuffle(x_values)

# Calculate the corresponding sine values
y_values = np.sin(x_values)

# Add a small random number to each y value
y_values += 0.1 * np.random.randn(*y_values.shape)

# Plot our data
plt.plot(x_values, y_values, 'b.')
plt.show()
```

This generates the sine wave data and adds Gaussian noise with a standard deviation of 0.1 to each y value, producing the data set used for machine learning.
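The training and evaluation code uses `x_train`, `x_validate`, and `x_test`, but the step that creates them is not shown here. The following is a minimal sketch of one way to produce them, assuming a 60/20/20 split into training, validation, and test sets. The proportions and the index names `TRAIN_END` and `VALIDATE_END` are assumptions for illustration.

```python
import math

import numpy as np

SAMPLES = 1000
np.random.seed(1337)

# Regenerate noisy sine data so this sketch runs on its own
x_values = np.random.uniform(low=0, high=2 * math.pi, size=SAMPLES)
y_values = np.sin(x_values) + 0.1 * np.random.randn(SAMPLES)

# Assumed proportions: 60% training, 20% validation, 20% test
TRAIN_END = SAMPLES * 60 // 100
VALIDATE_END = SAMPLES * 80 // 100

# np.split cuts the arrays at the given indices into three chunks
x_train, x_validate, x_test = np.split(x_values, [TRAIN_END, VALIDATE_END])
y_train, y_validate, y_test = np.split(y_values, [TRAIN_END, VALIDATE_END])

print(len(x_train), len(x_validate), len(x_test))  # 600 200 200
```

Because the x values are drawn at random, taking contiguous slices still gives each subset coverage of the whole 0 to 2π range.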

Create a neural network model

```python
import tensorflow as tf
from tensorflow.keras import layers

# x_train, y_train, x_validate and y_validate are assumed to come from a
# train/validation/test split of the generated data

model_2 = tf.keras.Sequential()

# First layer takes a scalar input and feeds it through 16 "neurons". The
# neurons decide whether to activate based on the 'relu' activation function.
model_2.add(layers.Dense(16, activation='relu', input_shape=(1,)))

# The new second layer may help the network learn more complex representations
model_2.add(layers.Dense(16, activation='relu'))

# Final layer is a single neuron, since we want to output a single value
model_2.add(layers.Dense(1))

# Compile the model using a standard optimizer and loss function for regression
model_2.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])

# Train for 600 epochs, reporting validation loss after each one
history_2 = model_2.fit(x_train, y_train, epochs=600, batch_size=16,
                        validation_data=(x_validate, y_validate))
```
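To get a feel for how small this network is, the parameter count can be worked out by hand from the layer widths (1 input, two hidden layers of 16, 1 output). The sketch below only does that arithmetic and does not use TensorFlow. The 4-bytes-per-parameter figure assumes 32-bit float weights.

```python
# Layer widths of the network: 1 input -> 16 -> 16 -> 1 output
layer_sizes = [1, 16, 16, 1]

total = 0
for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
    # A dense layer has one weight per input/output pair plus one bias per output
    params = n_in * n_out + n_out
    total += params
    print(f"Dense {n_in:>2} -> {n_out:>2}: {params} parameters")

print("total parameters:", total)           # 321
print("size as float32 bytes:", total * 4)  # 1284
```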

Prediction results

```python
# Calculate and print the loss on our test dataset
loss = model_2.evaluate(x_test, y_test)

# Make predictions based on our test dataset
predictions = model_2.predict(x_test)

# Graph the predictions against the actual values
plt.clf()
plt.title('Comparison of predictions and actual values')
plt.plot(x_test, y_test, 'b.', label='Actual')
plt.plot(x_test, predictions, 'r.', label='Predicted')
plt.legend()
plt.show()
```

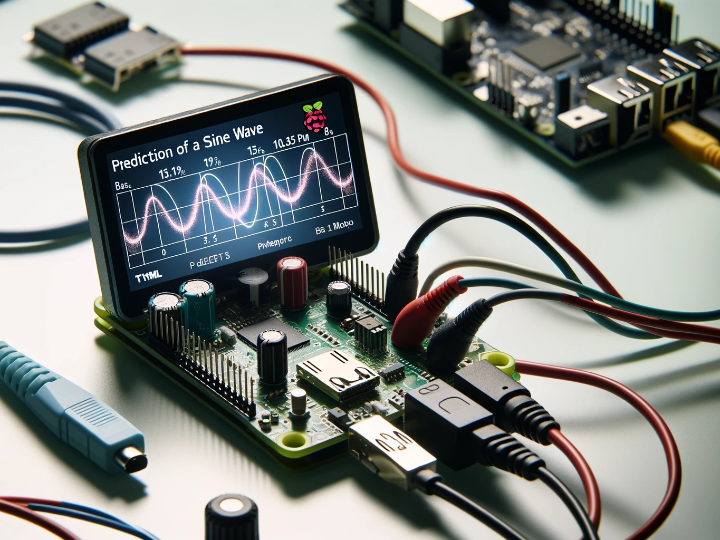

This graph compares the test data (actual values) with the model's predictions. The predicted points follow a clearly sinusoidal shape.

Convert to quantized model

```python
# Convert the model to the TensorFlow Lite format with quantization
converter = tf.lite.TFLiteConverter.from_keras_model(model_2)

# Optimize.DEFAULT replaces the deprecated OPTIMIZE_FOR_SIZE, which now
# behaves the same way
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_model = converter.convert()

# Save the model to disk
with open("sine_model_quantized.tflite", "wb") as f:
    f.write(tflite_model)

# Linux
# Install xxd if it is not available
!apt-get -qq install xxd

# Save the file as a C source file
!xxd -i sine_model_quantized.tflite > sine_model_quantized.cc

# Print the source file
!cat sine_model_quantized.cc
```

The original TensorFlow model stores its weights as 32-bit floating-point numbers, whereas the quantized TensorFlow Lite model stores them as 8-bit integers. At the cost of some numerical precision, this gives a theoretical improvement of up to 4 times, since each weight needs a quarter of the memory.
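The trade-off between precision and size can be illustrated with a small NumPy sketch of 8-bit affine quantization. This is a generic illustration under stated assumptions, not the exact procedure the TensorFlow Lite converter applies. The helper names `quantize` and `dequantize` and the random stand-in weights are hypothetical.

```python
import numpy as np

def quantize(values, num_bits=8):
    """Affine-quantize a float array to int8; returns (q, scale, zero_point)."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    lo, hi = float(values.min()), float(values.max())
    scale = (hi - lo) / (qmax - qmin)           # float width of one integer step
    zero_point = int(round(qmin - lo / scale))  # integer that represents 0.0
    q = np.clip(np.round(values / scale) + zero_point, qmin, qmax)
    return q.astype(np.int8), scale, zero_point

def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale

# Hypothetical stand-in for one layer's float32 weights (16 x 16)
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.5, size=(16, 16)).astype(np.float32)

q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
max_error = float(np.abs(weights - restored).max())

print("float32 bytes:", weights.nbytes)  # 1024
print("int8 bytes:   ", q.nbytes)        # 256
print("max abs error:", max_error, "step size:", scale)
```

The int8 copy takes a quarter of the storage, and each restored weight differs from the original by roughly half a quantization step at most.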

Quantized result

```c
unsigned char sine_model_quantized_tflite[] = {
  0x18, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33, 0x00, 0x00, 0x0e, 0x00,
  0x18, 0x00, 0x04, 0x00, 0x08, 0x00, 0x0c, 0x00, 0x10, 0x00, 0x14, 0x00,
  0x0e, 0x00, 0x00, 0x00, 0x03, 0x00, 0x00, 0x00, 0x10, 0x0a, 0x00, 0x00,
  0xb8, 0x05, 0x00, 0x00, 0xa0, 0x05, 0x00, 0x00, 0x04, 0x00, 0x00, 0x00,
  0x0b, 0x00, 0x00, 0x00, 0x90, 0x05, 0x00, 0x00, 0x7c, 0x05, 0x00, 0x00,
  0x24, 0x05, 0x00, 0x00, 0xd4, 0x04, 0x00, 0x00, 0xc4, 0x00, 0x00, 0x00,
  0x74, 0x00, 0x00, 0x00, 0x24, 0x00, 0x00, 0x00, 0x1c, 0x00, 0x00, 0x00,
  0x14, 0x00, 0x00, 0x00, 0x0c, 0x00, 0x00, 0x00, 0x04, 0x00, 0x00, 0x00,
  0x54, 0xf6, 0xff, 0xff, 0x58, 0xf6, 0xff, 0xff, 0x5c, 0xf6, 0xff, 0xff,
  0x60, 0xf6, 0xff, 0xff, 0xc2, 0xfa, 0xff, 0xff, 0x04, 0x00, 0x00, 0x00,
  ...
  ...
  0x00, 0x00, 0x0a, 0x00, 0x0c, 0x00, 0x07, 0x00, 0x00, 0x00, 0x08, 0x00,
  0x0a, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x09, 0x03, 0x00, 0x00, 0x00
};
unsigned int sine_model_quantized_tflite_len = 2640;
```

This is the quantized model exported as a C array. The serialized model is stored byte by byte (as 8-bit values) in the sine_model_quantized_tflite array, and sine_model_quantized_tflite_len gives its size in bytes (2640).
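A quick sanity check on an exported array is to look at its first eight bytes. A TensorFlow Lite model is a FlatBuffer: the first four bytes are a little-endian offset to the root table, and the next four are the file identifier, which is `TFL3` for TensorFlow Lite. A small sketch using the bytes copied from the start of the array above:

```python
# First 8 bytes of sine_model_quantized_tflite, copied from the array above
header = bytes([0x18, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33])

# Bytes 0-3: little-endian offset to the FlatBuffer root table
root_offset = int.from_bytes(header[0:4], "little")

# Bytes 4-7: FlatBuffer file identifier
identifier = header[4:8].decode("ascii")

print(root_offset)  # 24
print(identifier)   # TFL3
```

If the identifier is anything other than `TFL3`, the array was probably not generated from a valid `.tflite` file.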

Summary of major C++ functions

```cpp
void setup() {
  // Set up logging. Google style is to avoid globals or statics because of
  // lifetime uncertainty, but since this has a trivial destructor it's okay.
  // NOLINTNEXTLINE(runtime-global-variables)
  static tflite::MicroErrorReporter micro_error_reporter;
  error_reporter = &micro_error_reporter;

  // Map the model into a usable data structure. This doesn't involve any
  // copying or parsing, it's a very lightweight operation.
  model = tflite::GetModel(g_sine_model_data);
  if (model->version() != TFLITE_SCHEMA_VERSION) {
    TF_LITE_REPORT_ERROR(error_reporter,
                         "Model provided is schema version %d not equal "
                         "to supported version %d.",
                         model->version(), TFLITE_SCHEMA_VERSION);
    return;
  }

  // This pulls in all the operation implementations we need.
  // NOLINTNEXTLINE(runtime-global-variables)
  static tflite::ops::micro::AllOpsResolver resolver;

  // Build an interpreter to run the model with.
  static tflite::MicroInterpreter static_interpreter(
      model, resolver, tensor_arena, kTensorArenaSize, error_reporter);
  interpreter = &static_interpreter;

  // Allocate memory from the tensor_arena for the model's tensors.
  TfLiteStatus allocate_status = interpreter->AllocateTensors();
  if (allocate_status != kTfLiteOk) {
    TF_LITE_REPORT_ERROR(error_reporter, "AllocateTensors() failed");
    return;
  }
  // ... (rest of setup() not shown in this summary)
}

void loop() {
  // Calculate an x value to feed into the model. We compare the current
  // inference_count to the number of inferences per cycle to determine
  // our position within the range of possible x values the model was
  // trained on, and use this to calculate a value.
  float position = static_cast<float>(inference_count) /
                   static_cast<float>(kInferencesPerCycle);
  float x_val = position * kXrange;

  // Place our calculated x value in the model's input tensor
  input->data.f[0] = x_val;

  // Run inference, and report any error
  TfLiteStatus invoke_status = interpreter->Invoke();
  if (invoke_status != kTfLiteOk) {
    TF_LITE_REPORT_ERROR(error_reporter, "Invoke failed on x_val: %f\n",
                         static_cast<double>(x_val));
    return;
  }
  // ... (rest of loop() not shown in this summary)
}
```

The W5500-EVB-Pico is driven by the setup and loop functions. setup() configures logging, loads the model, builds the interpreter, and allocates tensor memory. After that, loop() runs repeatedly, computing a new x value and running inference on each pass.
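The stepping logic in loop() can be simulated on a PC to see how x sweeps through one sine period and how the result could drive an LED. The sketch below is in Python rather than the firmware's C++, and several parts are assumptions for illustration: the values given to `K_XRANGE` and `K_INFERENCES_PER_CYCLE`, the wrap-around of the counter, the hypothetical `led_brightness` mapping to an 8-bit PWM value, and the use of `math.sin` as a stand-in for the model's output.

```python
import math

K_XRANGE = 2 * math.pi       # assumed value of kXrange: one full sine period
K_INFERENCES_PER_CYCLE = 20  # assumed value of kInferencesPerCycle

def led_brightness(y):
    """Map y in [-1, 1] to an 8-bit PWM duty value 0..255 (hypothetical mapping)."""
    clamped = max(-1.0, min(1.0, y))  # the model can slightly overshoot +/-1
    return int(127.5 * (clamped + 1.0))

inference_count = 0
for _ in range(K_INFERENCES_PER_CYCLE):
    # Same arithmetic as loop(): position in [0, 1) scaled to the x range
    position = inference_count / K_INFERENCES_PER_CYCLE
    x_val = position * K_XRANGE

    y_val = math.sin(x_val)  # stand-in for the interpreter's output
    print(f"x={x_val:.3f} y={y_val:+.3f} pwm={led_brightness(y_val)}")

    # Assumed wrap-around so the sweep restarts after one full cycle
    inference_count = (inference_count + 1) % K_INFERENCES_PER_CYCLE
```

A larger `K_INFERENCES_PER_CYCLE` gives a smoother fade at the cost of more inferences per period.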

Create makefile

make -f {tensorflow git directory} Makefile sinWaves

Binary output result

When you build with make and run the resulting binary, you can see the x and y values being printed.

Build on Arduino

This is the result of building and running it from the Arduino IDE.

Result

The output closely resembles a sine wave.